In the world of business, data has always been a valuable asset. For decades, companies have relied on Data Analysis (DA) and Business Intelligence (BI) to look in the rearview mirror, compiling reports to understand what happened last quarter, last month, or yesterday. This traditional approach, while useful, is fundamentally retrospective. But a seismic shift is underway. Artificial Intelligence (AI) is not just augmenting these fields; it’s completely rewriting the rulebook, transforming them from a static historical record into a dynamic, forward-looking guidance system.

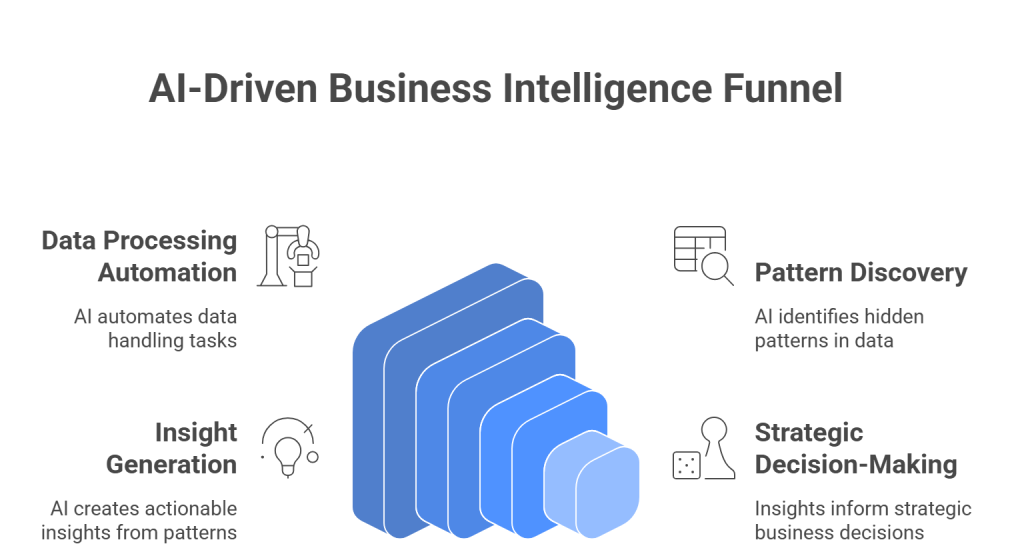

The integration of AI into DA and BI is creating a new intelligence nexus, a point where machine learning, predictive modeling, and natural language processing converge to unlock unprecedented value from data. This isn’t about slightly faster reports or fancier dashboards. It’s about automating the entire analytics lifecycle, making smarter strategic decisions, and empowering every single person in an organization to engage with data directly.

This article explores this revolution. We’ll dive into how AI is reshaping the foundations of data analysis, what core AI techniques are driving this change, and how the user experience is evolving to make data accessible to everyone. We will also navigate the significant challenges and ethical considerations that come with this power, and look ahead to the future of AI-powered analytics.

Section 1: Redefining the Analytical Landscape from the Ground Up

The journey from raw data to actionable insight is a complex one. Traditionally, this process was slow, manual, and confined to a small team of specialists. AI is reshaping it, starting with the most foundational, and often most painful, steps of the process.

Automating the Foundation: Data Preparation and Feature Engineering

Ask any data analyst, and they’ll tell you that a huge portion of their time—often reported to be as much as 80%—is spent not on analysis, but on data preparation. This involves extracting, cleaning, and structuring data to make it usable. It’s tedious, time-consuming, and prone to human error.

AI excels at automating these tasks:

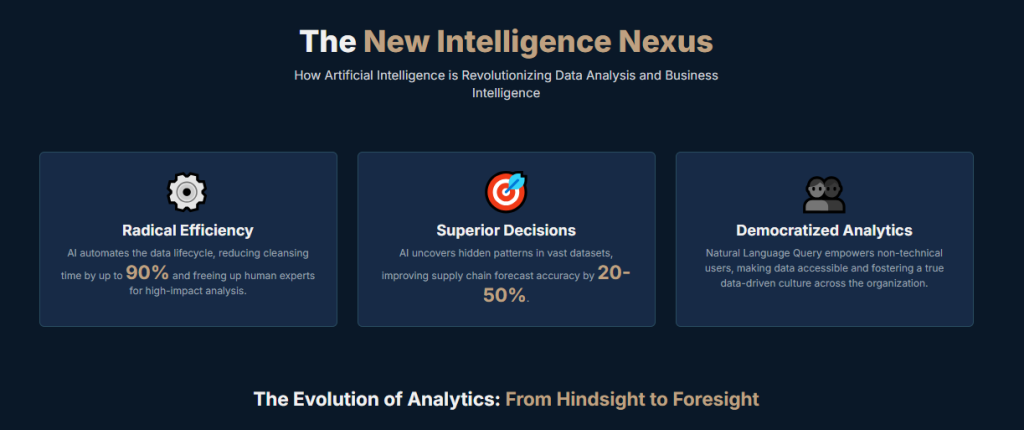

- Intelligent Data Cleansing: AI algorithms can automatically profile data to identify inconsistencies. Instead of simply deleting records with missing values, they can use machine learning models to predict and fill in (impute) the most probable values based on patterns in the rest of the data.

- Advanced Duplicate Detection: AI uses techniques like fuzzy matching to identify records that refer to the same entity but are not written identically (e.g., “John Smith” vs. “J. Smith”) and merge them, creating a reliable single source of truth.

- Automated Feature Engineering: This is the art of creating new input variables (features) from raw data to improve the accuracy of predictive models. AI can automatically generate and test thousands of potential features, discovering predictive relationships that a human analyst might never find. The Deep Feature Synthesis (DFS) algorithm, for example, has been shown to outperform a majority of human teams in data science competitions.
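The two cleansing ideas above can be sketched with nothing but the Python standard library. This is a minimal illustration under stated assumptions, not how any particular BI product works: the customer records are hypothetical, a missing `spend` value is imputed as the mean of the k nearest complete rows by age (a simple k-nearest-neighbour imputer standing in for the machine learning models mentioned above), and two records are flagged as likely duplicates when `difflib.SequenceMatcher` rates their names at 0.75 or higher and their ages match. The threshold and the age rule are arbitrary choices for the example.

```python
from difflib import SequenceMatcher
from itertools import combinations
from math import dist

# Hypothetical customer records; None marks a missing value.
records = [
    {"name": "John Smith", "age": 34, "spend": 1200.0},
    {"name": "J. Smith", "age": 34, "spend": 1180.0},
    {"name": "Maria Garcia", "age": 51, "spend": None},
    {"name": "Wei Chen", "age": 49, "spend": 2100.0},
    {"name": "Ana Lopez", "age": 55, "spend": 2300.0},
]


def knn_impute(rows, target, features, k=2):
    """Fill missing `target` values with the mean of the k nearest complete rows."""
    complete = [r for r in rows if r[target] is not None]
    for row in rows:
        if row[target] is None:
            point = [row[f] for f in features]
            # Rank complete rows by Euclidean distance on the feature columns.
            neighbours = sorted(
                complete, key=lambda r: dist([r[f] for f in features], point)
            )[:k]
            row[target] = sum(r[target] for r in neighbours) / len(neighbours)
    return rows


def find_duplicates(rows, threshold=0.75):
    """Return index pairs whose names are similar and whose ages match (assumed rule)."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(rows), 2):
        similarity = SequenceMatcher(None, a["name"], b["name"]).ratio()
        if similarity >= threshold and a["age"] == b["age"]:
            pairs.append((i, j))
    return pairs


knn_impute(records, target="spend", features=["age"])
print(records[2]["spend"])       # 2200.0: mean of Wei Chen and Ana Lopez
print(find_duplicates(records))  # [(0, 1)]: "John Smith" and "J. Smith"
```

Comparing every pair is quadratic in the number of records, so production pipelines typically narrow the candidates first (for example, only comparing records that share a postcode) and use richer models, such as scikit-learn's `KNNImputer`, for imputation.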

However, this automation comes with a critical warning: the “Garbage In, Gospel Out” risk. If an AI is trained to clean data that contains systemic historical biases, it may learn to standardize and amplify those flaws rather than correct them. This makes human oversight and the principles of Explainable AI (XAI) essential, even at these early stages.

From Description to Prescription: The Four Tiers of Analytics

AI enhances every level of analytical maturity, allowing businesses to move from simply looking at the past to actively shaping the future. This progression is best understood through four distinct tiers of analytics.

- Descriptive Analytics (“What happened?”): This is traditional BI. AI supercharges it by analyzing vast amounts of both structured (sales numbers) and unstructured (customer reviews, social media comments) data in real time.

- Diagnostic Analytics (“Why did it happen?”): AI rapidly sifts through countless variables to surface likely root causes and hidden correlations behind an event, often providing answers in minutes instead of days.

- Predictive Analytics (“What might happen next?”): This is where AI truly shines. By training on historical data, machine learning models can forecast future outcomes, from predicting customer churn to anticipating supply chain disruptions. Their accuracy depends heavily on how well that historical data reflects current conditions.

- Prescriptive Analytics (“What should we do about it?”): The ultimate goal. Prescriptive models take the forecasts from the predictive tier, simulate various potential actions, and recommend the optimal course of action to achieve a desired outcome.

| Analytics Type | Core Question | Traditional Approach | AI-Powered Enhancement |
|---|---|---|---|
| Descriptive | What happened? | Static reports, manual data aggregation. | Automated analysis of vast data; real-time dashboards. |
| Diagnostic | Why did it happen? | Manual drill-downs, human-led root cause analysis. | Rapid identification of likely contributing factors using ML. |
| Predictive | What might happen next? | Statistical modeling on limited datasets. | Forecasting with advanced ML models trained on large datasets. |
| Prescriptive | What should we do? | Human-based scenario planning and intuition. | Data-driven recommendations and optimization. |

Section 2: The New Conversational Experience: Democratizing Data for Everyone

Perhaps the most profound impact of AI on business intelligence is the complete transformation of the user experience. The era of navigating complex menus and needing to know a query language like SQL is ending. AI is making data interaction as simple as having a conversation.

Talk to Your Data with Natural Language Query (NLQ)

Natural Language Query (NLQ) is a game-changing technology that allows users to ask questions of their data in plain, everyday language. Instead of building a complex report, a marketing manager can now simply type or ask:

- “What were our top five campaigns by ROAS on each platform for the last 30 days?”

- “Show me the sales trend for our new product line in the UK, and compare it to Germany.”

The AI-powered BI system understands the intent behind the question, translates it into a formal database query, retrieves the data, and presents the answer, often as an interactive chart or graph. This single capability is the primary driver behind the democratization of analytics, breaking down technical barriers and empowering non-specialist business users to explore data for themselves.
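The translation step can be illustrated with a deliberately tiny example. Production NLQ engines rely on semantic models of the data and, increasingly, on large language models. The sketch below instead uses a single regular expression that recognises one question shape (a simplified form of the first example question, without the per-platform breakdown) and turns it into a parameterised SQL query against a hypothetical in-memory SQLite table named `campaigns`, where ROAS is computed as revenue divided by ad spend.

```python
import re
import sqlite3

# Hypothetical in-memory table and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE campaigns "
    "(name TEXT, platform TEXT, spend REAL, revenue REAL, days_ago INTEGER)"
)
conn.executemany(
    "INSERT INTO campaigns VALUES (?, ?, ?, ?, ?)",
    [
        ("Spring Sale", "Google", 100.0, 400.0, 3),
        ("Spring Sale", "Meta", 100.0, 250.0, 10),
        ("Retarget", "Meta", 50.0, 300.0, 5),
        ("Brand", "Google", 200.0, 300.0, 20),
        ("Old Promo", "Google", 10.0, 100.0, 45),  # outside a 30-day window
        ("Launch", "TikTok", 80.0, 240.0, 1),
        ("Loyalty", "Meta", 40.0, 70.0, 29),
    ],
)

NUMBER_WORDS = {"three": 3, "five": 5, "ten": 10}
PATTERN = re.compile(r"top (\w+) campaigns by roas.*?last (\d+) days", re.IGNORECASE)


def question_to_sql(question):
    """Translate one narrow question shape into a parameterised SQL query."""
    match = PATTERN.search(question)
    if match is None:
        raise ValueError("This toy grammar does not understand the question.")
    count_text, days = match.groups()
    limit = int(count_text) if count_text.isdigit() else NUMBER_WORDS[count_text.lower()]
    sql = (
        "SELECT name, platform, SUM(revenue) / SUM(spend) AS roas "
        "FROM campaigns WHERE days_ago < ? "
        "GROUP BY name, platform ORDER BY roas DESC LIMIT ?"
    )
    return sql, (int(days), limit)


sql, params = question_to_sql("What were our top five campaigns by ROAS for the last 30 days?")
results = conn.execute(sql, params).fetchall()
for name, platform, roas in results:
    print(f"{name:<12} {platform:<7} ROAS {roas:.2f}")
```

Binding the extracted values as query parameters, rather than pasting user text into the SQL string, is the same safeguard a real NLQ layer needs so that a question cannot inject arbitrary SQL.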

Get the Story Behind the Data with Natural Language Generation (NLG)

If NLQ is about asking questions, Natural Language Generation (NLG) is about getting the answers in a narrative format. An NLG-enhanced system doesn’t just show you a chart; it tells you the story behind it.

For example, alongside a line chart showing a spike in revenue, an NLG module might automatically generate a concise summary:

“In Q3 2025, total revenue increased by 12% to £5.4 million, primarily driven by a 30% surge in sales for the ‘X-1’ product line in the London region. However, this growth was partially offset by a 5% decline in the Scottish market, which requires further investigation.”
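The simplest form of NLG is template-based: compute the key figures, pick out the notable movements, and slot them into pre-written sentences. The sketch below does exactly that for hypothetical quarterly revenue by region (in £ millions). The figures, region names, and sentence templates are invented for illustration and only loosely mirror the example summary above. Commercial NLG modules and LLM-based generators produce far more varied language, but a narrative is only as reliable as the figures computed underneath it.

```python
# Hypothetical quarterly revenue by region, in £ millions.
previous_quarter = {"London": 2.0, "Manchester": 1.5, "Scotland": 1.0}
current_quarter = {"London": 2.6, "Manchester": 1.6, "Scotland": 0.95}


def summarize_revenue(previous, current):
    """Turn period-over-period figures into a short templated narrative."""
    total_prev, total_curr = sum(previous.values()), sum(current.values())
    total_change = (total_curr - total_prev) / total_prev
    changes = {
        region: (current[region] - previous[region]) / previous[region]
        for region in previous
    }
    best = max(changes, key=changes.get)
    worst = min(changes, key=changes.get)

    direction = "increased" if total_change >= 0 else "decreased"
    sentences = [
        f"Total revenue {direction} by {abs(total_change):.0%} to £{total_curr:.2f} million."
    ]
    if changes[best] > 0:
        sentences.append(f"The strongest growth came from {best} (up {changes[best]:.0%}).")
    if changes[worst] < 0:
        sentences.append(
            f"{worst} declined by {abs(changes[worst]):.0%}, which may need further investigation."
        )
    return " ".join(sentences)


print(summarize_revenue(previous_quarter, current_quarter))
```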

This synergy between NLQ and NLG creates a powerful, intuitive cycle of discovery. A user asks a broad question, receives a visualization with a narrative summary that points out an interesting anomaly, and then asks a follow-up question to drill down further. The BI tool becomes less of a static repository and more of an active partner in the analytical process.

Section 3: Navigating the Future: Challenges, Ethics, and the Road Ahead

While the benefits are transformative, the path to AI adoption is not without its challenges. Realizing the full potential of this new intelligence nexus requires navigating practical hurdles and profound ethical considerations.

Implementation Hurdles and the “Black Box” Problem

Organizations often face significant obstacles on their AI journey. The most common include:

- Data Quality: The “garbage in, garbage out” principle is absolute. AI models are only as good as the data they are trained on. Siloed, inconsistent, and incomplete data is frequently cited as the single biggest barrier to success.

- High Costs & Talent Shortage: Implementing enterprise-grade AI requires significant investment in technology and infrastructure. Furthermore, there is a global shortage of talent with the hybrid skills needed to build and manage these systems effectively.

- The “Black Box” Problem: Many advanced AI models operate as “black boxes,” meaning their internal decision-making processes are opaque and hard for humans to interpret. This erodes trust and makes errors or biases difficult to diagnose.

- Algorithmic Bias: If an AI is trained on biased historical data, it can learn and amplify those biases. This can lead to unfair and discriminatory outcomes, such as AI recruiting tools that penalize female candidates or loan algorithms that disadvantage minority applicants.
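One widely used check for the kind of bias described above is the disparate impact ratio: the selection rate for one group divided by the selection rate for a reference group. Under the “four-fifths rule” from US employment-selection guidelines, a ratio below 0.8 is commonly treated as a warning sign. The sketch below computes it for hypothetical approval decisions; the group labels and counts are invented, and passing the check does not by itself show that a model is fair.

```python
from collections import Counter


def selection_rates(decisions):
    """Share of positive outcomes (e.g. approvals) per group."""
    totals, positives = Counter(), Counter()
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(decisions, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    rates = selection_rates(decisions)
    return rates[protected] / rates[reference]


# Hypothetical decisions: 30 of 50 group-A applicants approved, 20 of 50 in group B.
decisions = (
    [("A", True)] * 30 + [("A", False)] * 20
    + [("B", True)] * 20 + [("B", False)] * 30
)
ratio = disparate_impact(decisions, protected="B", reference="A")
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold: review the model and its training data.")
```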

The Rise of Responsible AI and Explainable AI (XAI)

In response to these challenges, the field of Explainable AI (XAI) has emerged as a critical discipline. XAI encompasses methods and techniques that make the decisions of AI models transparent and understandable to humans. It is a core component of a broader Responsible AI framework.

XAI isn’t just an ethical “nice-to-have”; it’s a core business enabler. By providing transparency, XAI builds the trust necessary for business leaders to act on AI-generated recommendations. It allows developers to identify and mitigate bias, debug models when they fail, and ensure compliance with regulations.
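Many XAI techniques explain an individual prediction by attributing it to the input features. For a linear model with independent features, that attribution can be computed exactly: each feature contributes its weight multiplied by how far its value sits from the average, and for such models these contributions coincide with SHAP values. The sketch below applies this to a hypothetical churn-risk score; the weights, baseline averages, and customer are all invented. For non-linear models, libraries such as SHAP and LIME estimate comparable attributions.

```python
# Hypothetical linear churn-risk model: score = bias + sum(weight * feature).
WEIGHTS = {"support_tickets": 0.30, "months_active": -0.05, "monthly_spend": -0.01}
BIAS = 0.5
# Hypothetical average feature values across the training data.
BASELINE = {"support_tickets": 2.0, "months_active": 24.0, "monthly_spend": 50.0}


def risk_score(customer):
    return BIAS + sum(weight * customer[name] for name, weight in WEIGHTS.items())


def explain(customer):
    """Exact per-feature contributions for a linear model, relative to the baseline."""
    return {
        name: weight * (customer[name] - BASELINE[name])
        for name, weight in WEIGHTS.items()
    }


customer = {"support_tickets": 6, "months_active": 12, "monthly_spend": 40.0}
contributions = explain(customer)
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:<16} {value:+.2f}")
print(f"score vs baseline: {risk_score(customer) - risk_score(BASELINE):+.2f}")
```

The contributions add up to the gap between this customer's score and the average customer's score, which lets an analyst see at a glance that the number of support tickets is the main reason this customer was flagged.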

The Future is Ambient Intelligence

Looking ahead, the trend is toward what is often called “ambient intelligence.” The BI tool as a separate destination will begin to disappear. Instead, AI-driven insights will be embedded directly into the applications and workflows where people do their jobs.

A project manager won’t have to run a report; an AI agent within their project management software will proactively alert them to a potential budget overrun. A sales leader won’t have to build a dashboard; an AI agent within their CRM will flag a stalling deal and recommend the next best action. The BI platform will become the powerful, centralized “brain,” but the intelligence will be everywhere, seamlessly integrated into the fabric of daily work.
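At its core, an embedded alert of this kind can be quite simple. The sketch below shows the sort of rule a project-management agent might evaluate in the background, using the standard earned value management (EVM) estimate at completion, EAC = BAC / CPI, where CPI is earned value divided by actual cost. The `ProjectStatus` class, the 5% tolerance, and the figures are hypothetical; a real agent might substitute a learned forecast for the EVM formula and push the message into the user's workflow rather than returning a string.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProjectStatus:
    """Hypothetical snapshot a project-management tool might expose."""
    budget: float            # budget at completion (BAC)
    actual_cost: float       # spend to date (AC)
    percent_complete: float  # share of work done, between 0 and 1


def projected_overrun(status: ProjectStatus) -> float:
    earned_value = status.budget * status.percent_complete  # EV
    if earned_value <= 0 or status.actual_cost <= 0:
        return 0.0  # no progress or spend yet, so nothing to extrapolate from
    cpi = earned_value / status.actual_cost       # cost performance index
    estimate_at_completion = status.budget / cpi  # EAC = BAC / CPI
    return estimate_at_completion - status.budget


def budget_alert(status: ProjectStatus, tolerance: float = 0.05) -> Optional[str]:
    """Return an alert message if the projected overrun exceeds the tolerance."""
    overrun = projected_overrun(status)
    if overrun > tolerance * status.budget:
        share = overrun / status.budget
        return f"Projected overrun of {overrun:,.0f} ({share:.0%} of budget)."
    return None


print(budget_alert(ProjectStatus(budget=200_000, actual_cost=100_000, percent_complete=0.4)))
```

For a project that is 40% complete but has already spent half of a 200,000 budget, the rule projects a final cost of 250,000 and returns "Projected overrun of 50,000 (25% of budget)."; the same spend at 50% completion is on track and returns `None`.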

Conclusion: The Strategic Imperative

The integration of Artificial Intelligence into data analysis and business intelligence represents a fundamental paradigm shift. It is elevating analytics from a retrospective reporting function to a proactive, predictive, and prescriptive strategic asset. Companies that successfully harness this new intelligence nexus will not only optimize their current operations but will also be positioned to innovate, adapt, and lead in an increasingly data-centric world. Adopting AI is no longer a discretionary investment; it is a core strategic imperative for survival and growth.