The Key to Unlocking LLMs: Up to 300% Improvement with Better Prompts

Large Language Models (LLMs) have revolutionized the way we interact with artificial intelligence. These powerful models can generate human-quality text, translate languages, write different kinds of creative content, and answer your questions in an informative way. However, the quality of output you get from an LLM is heavily dependent on the quality of the input you provide. This is where prompt engineering comes in.

Did you know that small tweaks to your prompts can unlock HUGE potential in Large Language Models (LLMs)? It’s true! By mastering the art of prompt engineering, you can significantly enhance the accuracy, creativity, and overall performance of these powerful AI tools. 🧠

Here’s the secret sauce:

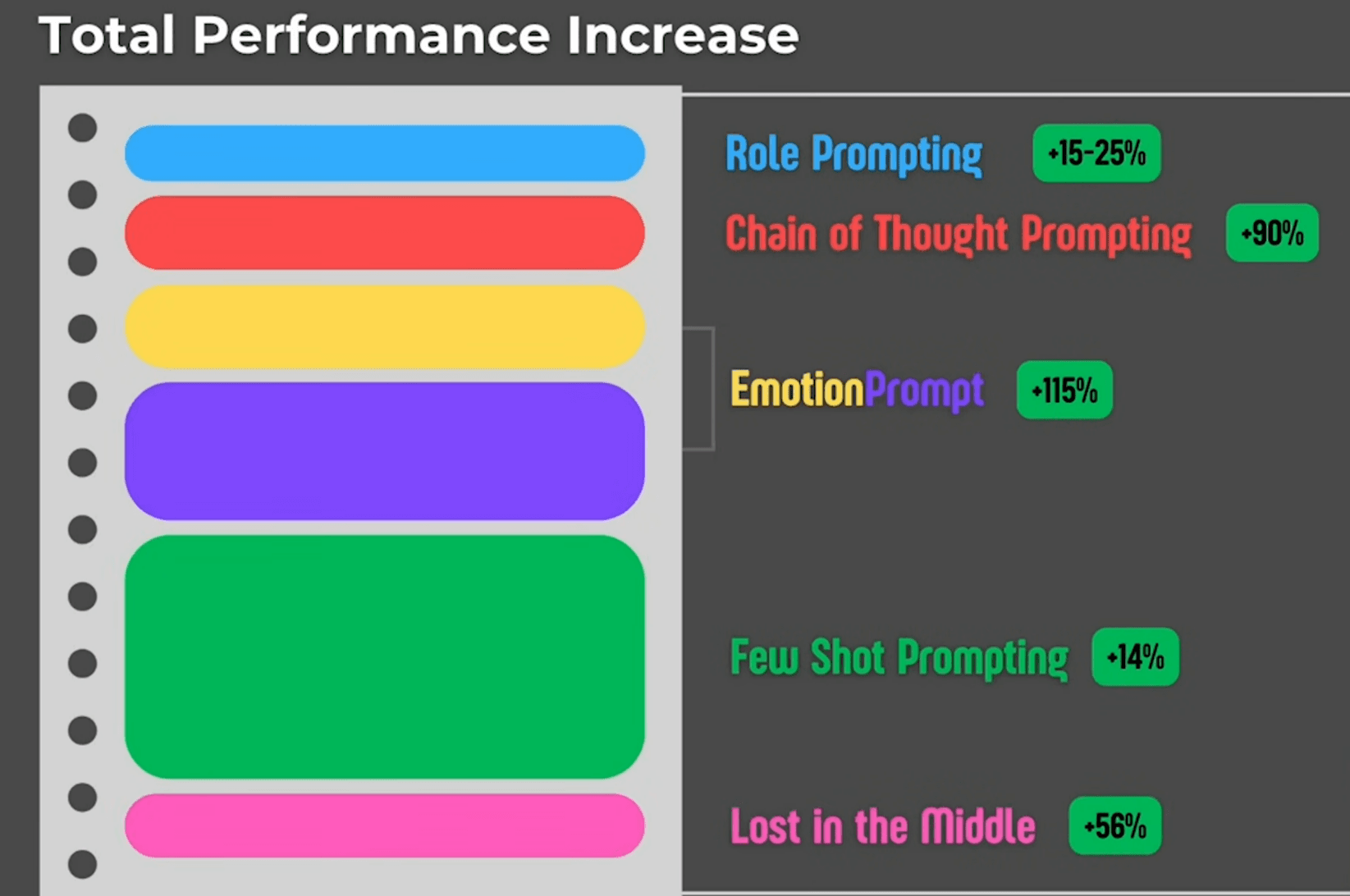

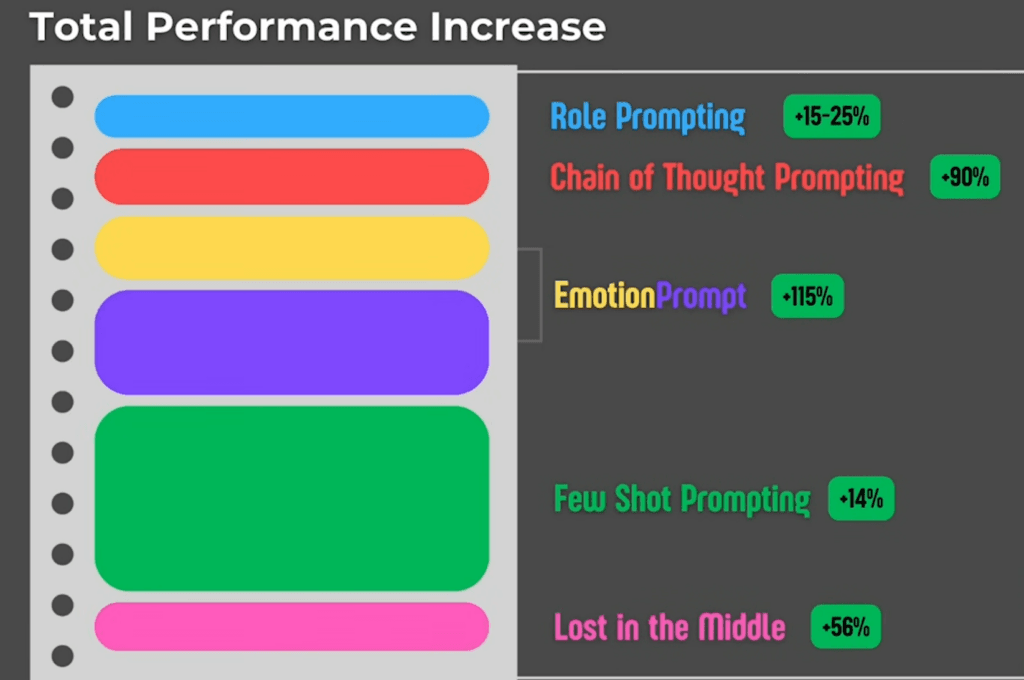

☑️ Role Prompting: Tell the LLM what role to play! Want a poem? Ask it to be a poet. Need code? Instruct it to be a programmer. 🎭

☑️ Shot Prompting: Give examples! Show the LLM exactly what you want by providing input-output pairs. A few well-chosen examples often communicate the task better than a long description. 🧑🏫

☑️ Chain-of-Thought Prompting: Encourage the LLM to think step-by-step. This leads to more logical and accurate results, especially for complex tasks. 🧩

☑️ Perfect Prompt Template: Follow a structure! Start with context, define your goal, specify the format, break down the task, and provide examples. 🏗️

Prompt engineering is the art and science of crafting effective prompts to guide LLMs toward generating the desired output. By providing clear, concise, and specific instructions, you can significantly enhance the performance of LLMs and unlock their full potential. This article explores various prompt engineering techniques and best practices to help you get noticeably better results from LLMs.

Section 1: Understanding the Basics of Prompt Engineering

What is a Prompt?

A prompt is essentially an instruction or a set of instructions you give to an LLM to guide its response. It can be a question, a statement, a piece of code, or even a combination of these. The quality of your prompt directly impacts the quality of the LLM’s output. A well-crafted prompt provides the LLM with the necessary context and information to understand your request and generate a relevant and accurate response.

Why is Prompt Engineering Important?

Prompt engineering is crucial because it bridges the gap between human intention and machine interpretation. LLMs are trained on massive datasets of text and code, but they still require clear instructions to understand what you want them to do. By providing specific and well-structured prompts, you can:

- Improve accuracy: Guide the LLM towards generating more accurate and relevant responses.

- Enhance creativity: Encourage the LLM to produce more creative and diverse outputs.

- Increase efficiency: Reduce the time and effort required to get the desired results.

- Unlock new capabilities: Explore and utilize the full potential of LLMs for various tasks.

Section 2: Effective Prompt Engineering Techniques

1. Role Prompting

Role prompting involves assigning a specific role to the LLM within the prompt. For example, you could start your prompt with “You are a helpful and informative assistant” or “You are a world-renowned chef.” By assigning a role, you provide the LLM with context and guide it towards adopting a specific persona or expertise, which can lead to more relevant and accurate responses.
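In practice, the role usually goes in a system message that comes before the user's request. The sketch below assumes the chat-style message format (a list of `role`/`content` dictionaries) that many LLM APIs accept. The helper name `build_role_messages` is hypothetical. The code only builds the payload and does not call any model.

```python
def build_role_messages(role_description: str, user_request: str) -> list:
    """Build a chat-style message list that assigns the model a role.

    Assumes the common {"role": ..., "content": ...} message format;
    check your provider's documentation for the exact schema.
    """
    return [
        # The system message sets the persona before the user speaks.
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


messages = build_role_messages(
    "a world-renowned chef",
    "Suggest a three-course dinner menu for a rainy evening.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Swapping only the role description while keeping the request fixed is an easy way to see how much the persona changes the answer.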

2. Shot Prompting

Shot prompting refers to how many examples of the desired input-output structure you include in the prompt. There are different types of shot prompting:

- Zero-shot prompting: This involves giving the instruction without any examples and relying on the model’s existing knowledge. For example, “Translate this sentence into Spanish: The cat sat on the mat.”

- One-shot prompting: This involves providing a single example of the desired interaction before the actual request. For example, “Translate English to Spanish. Example: ‘The dog is sleeping.’ → ‘El perro está durmiendo.’ Now translate: ‘The cat sat on the mat.’”

- Few-shot prompting: This involves providing multiple examples to further refine the AI’s understanding of the expected output.
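The three variants differ only in how many worked pairs come before the real query. The sketch below assembles such a prompt from a list of `(input, output)` pairs. An empty list gives zero-shot, one pair gives one-shot, and several pairs give few-shot. The `Input:`/`Output:` labels and the function name `build_shot_prompt` are illustrative assumptions, not a required format.

```python
def build_shot_prompt(instruction, examples, query):
    """Assemble a zero-, one-, or few-shot prompt.

    examples: list of (input, output) pairs; an empty list gives zero-shot.
    The "Input:"/"Output:" labels are an illustrative convention.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final query ends with an open "Output:" for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    ("The dog is sleeping.", "El perro está durmiendo."),
    ("I like green apples.", "Me gustan las manzanas verdes."),
]
few_shot = build_shot_prompt("Translate English to Spanish.", examples, "The cat sat on the mat.")
print(few_shot)
```

Keeping the labels identical in every example and in the final query helps the model continue the pattern rather than invent a new one.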

3. Chain-of-Thought Prompting

Chain-of-thought prompting encourages the LLM to explain its reasoning step-by-step, which can lead to more accurate results, especially for tasks requiring logic and reasoning. This can be achieved through:

- One-shot Chain-of-Thought Prompting: Providing an example with a step-by-step explanation.

- Zero-shot Chain-of-Thought Prompting: Adding a phrase such as “Let’s think step by step” to the prompt so that the model articulates its reasoning before giving an answer.
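Both chain-of-thought variants can be written as plain string templates. In the sketch below, the zero-shot version places the trigger phrase where the answer would begin. The one-shot version shows a single worked example whose answer spells out each step. The `Q:`/`A:` layout and the function names are illustrative assumptions.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Start the answer with a reasoning trigger so the model explains its steps."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


def one_shot_cot(example_question: str, example_reasoning: str, question: str) -> str:
    """Show one worked example with explicit reasoning, then ask the new question."""
    return (
        f"Q: {example_question}\nA: {example_reasoning}\n\n"
        f"Q: {question}\nA:"
    )


question = "A shop sells pens at 3 for $2. How much do 12 pens cost?"
print(zero_shot_cot(question))
print()
print(one_shot_cot(
    "Tom has 5 apples and buys 2 bags of 3 apples. How many apples does he have?",
    "He starts with 5. Two bags of 3 is 2 * 3 = 6. 5 + 6 = 11. The answer is 11.",
    question,
))
```

The worked example's reasoning style is what the model tends to imitate, so it is worth writing it as carefully as the final answer.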

4. Perfect Prompt Template

A well-structured prompt can significantly improve the LLM’s performance. Consider using the following template for crafting effective prompts:

- Context: Provide background information and set the stage for the task.

- Specific Goal: Clearly state the desired outcome.

- Format: Define the structure of the expected response (e.g., bullet points, paragraph, code).

- Task Breakdown: Divide complex tasks into smaller, more manageable steps.

- Examples: Provide illustrations of the desired input-output relationship.
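The five template parts map naturally onto labeled sections of a single prompt string. The sketch below assumes simple `Context:`/`Goal:`/`Format:`/`Steps:`/`Examples:` labels. Both the labels and the function name `build_prompt` are hypothetical, and any consistent layout would work.

```python
def build_prompt(context, goal, output_format, steps, examples=()):
    """Assemble a prompt from the five template parts, in order."""
    sections = [
        f"Context: {context}",
        f"Goal: {goal}",
        f"Format: {output_format}",
        # Number the sub-tasks so the model can follow them in sequence.
        "Steps:\n" + "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1)),
    ]
    if examples:  # Examples are optional; omit the section when none are given.
        sections.append("Examples:\n" + "\n".join(f"- {ex}" for ex in examples))
    return "\n\n".join(sections)


prompt = build_prompt(
    context="I run a small bakery and post on social media twice a week.",
    goal="Write one Instagram caption announcing our new sourdough loaf.",
    output_format="A single paragraph under 50 words, followed by three hashtags.",
    steps=["Mention the loaf's long fermentation.", "Invite readers to visit this weekend."],
    examples=["Fresh croissants are back! Flaky, buttery, and waiting for you. #bakery #croissant #weekend"],
)
print(prompt)
```

Building prompts from named parts also makes iteration easier, because you can change one part (say, the format) and leave the rest untouched.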

Section 3: Advanced Techniques and Best Practices

1. Tuning Generation Parameters

Beyond the prompt itself, you can further refine the output by adjusting the generation (sampling) parameters that most LLM APIs and playgrounds expose. These parameters include:

- Temperature: Controls the randomness of the output. Higher values lead to more creative but potentially less accurate responses, while lower values promote consistency and predictability.

- Stop Sequences: Define specific characters or phrases to signal the AI to stop generating text, ensuring concise and controlled outputs.

- Top P: Also called nucleus sampling. The model samples only from the smallest set of most-likely tokens whose combined probability reaches P, so lower values restrict it to safer, more predictable word choices.

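- Frequency and Presence Penalty: These settings discourage repetition. A frequency penalty lowers a token’s score in proportion to how often it has already appeared, while a presence penalty applies a fixed reduction to any token that has appeared at least once, nudging the model toward new words and topics.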
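To make these knobs concrete, here is a toy next-token sampler over a handful of made-up token scores (logits). It is a sketch, not any provider's implementation. The penalty step uses a simple additive form similar to the one OpenAI describes for its API, and other providers may differ. Temperature must be greater than zero here, although many APIs treat 0 as "always pick the most likely token".

```python
import math
import random


def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the score of tokens that already appeared in the output."""
    return {
        tok: logit
        - counts.get(tok, 0) * frequency_penalty  # grows with each repeat
        - (1.0 if counts.get(tok, 0) > 0 else 0.0) * presence_penalty  # flat, once seen
        for tok, logit in logits.items()
    }


def softmax_with_temperature(logits, temperature):
    """Turn scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs, top_p):
    """Keep the smallest set of most-likely tokens reaching top_p, then renormalize."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def truncate_at_stop(text, stop_sequences):
    """Cut generated text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


def sample_next(logits, counts, temperature=1.0, top_p=1.0,
                frequency_penalty=0.0, presence_penalty=0.0, rng=random):
    """Apply penalties, temperature, and top-p, then draw one token."""
    penalized = apply_penalties(logits, counts, frequency_penalty, presence_penalty)
    probs = top_p_filter(softmax_with_temperature(penalized, temperature), top_p)
    tokens, weights = zip(*probs.items())
    return rng.choices(tokens, weights=weights, k=1)[0]


logits = {"cat": 2.0, "dog": 1.0, "car": 0.1}  # made-up scores for illustration
print(sample_next(logits, counts={}, temperature=0.7, top_p=0.9, rng=random.Random(0)))
print(truncate_at_stop("Answer: 42\nQ: next question", ["\nQ:"]))
```

With these scores, a `top_p` of 0.5 leaves only "cat" in play, because its probability alone (about 0.66 at temperature 1) already exceeds 0.5.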

2. Iterative Refinement

Prompt engineering is an iterative process. It’s essential to experiment with different prompt variations and parameter settings to identify the combination that yields the most desirable results. Continuously evaluate and refine your prompts to unlock the full potential of LLMs.
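One way to make the iteration systematic is to score several prompt variants on the same small set of test cases and keep the best one. The sketch below assumes exact-match scoring, which suits short, well-defined answers. `toy_model` is a hypothetical stand-in for a real LLM call, so the example runs without any API.

```python
from typing import Callable, Dict, List, Tuple


def score_prompt(template: str, cases: List[Tuple[str, str]],
                 model: Callable[[str], str]) -> float:
    """Fraction of test cases where the model output exactly matches the expected answer."""
    hits = sum(model(template.format(input=text)).strip() == expected
               for text, expected in cases)
    return hits / len(cases)


def compare_prompts(templates: List[str], cases: List[Tuple[str, str]],
                    model: Callable[[str], str]) -> Dict[str, float]:
    """Score every prompt variant on the same cases so the comparison is fair."""
    return {template: score_prompt(template, cases, model) for template in templates}


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: returns the text after the last colon,
    uppercased only if the prompt mentions 'uppercase'."""
    word = prompt.rsplit(":", 1)[-1].strip()
    return word.upper() if "uppercase" in prompt.lower() else word


cases = [("cat", "CAT"), ("dog", "DOG")]
variants = ["Repeat this word: {input}", "Rewrite this word in uppercase: {input}"]
scores = compare_prompts(variants, cases, toy_model)
best = max(scores, key=scores.get)
print(scores)
print("Best variant:", best)
```

With a real model, you would swap `toy_model` for an API call. For open-ended tasks, you would also use a scoring function that fits the task better than exact match.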

3. Best Practices

- Be clear and concise: Avoid ambiguity and use precise language.

- Provide context: Give the LLM the necessary background information.

- Specify the desired format: Tell the LLM how you want the output to be structured.

- Break down complex tasks: Make it easier for the LLM to understand and execute your request.

- Use examples: Show the LLM what you’re looking for.

- Experiment and iterate: Don’t be afraid to try different approaches and refine your prompts based on the results.

Conclusion

Prompt engineering is a powerful tool that can significantly improve the performance of LLMs. By crafting effective prompts and utilizing advanced techniques, you can unlock the full potential of these models and achieve remarkable results. Remember, prompt engineering is an ongoing process of learning and experimentation. As you gain more experience, you’ll be able to craft increasingly sophisticated prompts that generate high-quality outputs tailored to your specific needs.